- and that’s from a self-proclaimed fan of peer review.

For teaching librarians, the problems with peer review have a particularly troubling dimension because we spend so much of our time telling students of the vital need to evaluate information for quality, reliability, validity and authority. We stress the importance of using scholarly sources over open web ones. What’s more, our discovery services even have a little tickbox that limits searches to peer-reviewed articles, because they’re the ones you can rely on. Right? …

So what do we do if peer review fails to act as the guarantee of scholarly quality that we expect and need it to be? Where does it leave us if “peer review is a joke”?

The purpose of peer review

From my point of view as a journal editor, peer review is far from being a joke. On the contrary, it has a number of very useful functions:

· It lets me see how the article will be received by the community

The reviewers act as trial readers who have certain expectations about the kind of material they’re going to find in any given journal. This means I can get an idea of how relevant the work is to the journal’s audience, and whether this particular journal is the best place for it to appear and be appreciated.

· It tests the flow of the argument

Because peer reviewers read actively and critically, they are alert to any breaks in the logical construction of the work. They’ll spot any discontinuities in the argument, any assumptions left unquestioned, and any disconnection between the method, the results and the conclusions, and will suggest ways to fix them.

· It suggests new literature or different viewpoints that add to the research context

One of the hardest aspects of academic writing is reconciling variant views on a topic, but a partial – in any sense – approach does no service to research. Every argument will have its counter-position, just as every research method has its limitations. Ignoring these doesn’t make them go away; it just makes for an unbalanced article. Reviewers can bring a complementary perspective on the literature that will make for a more considered background to the research.

· It helps refine and clarify a writing style which is governed by rigid conventions and in which complex ideas are discussed

If you’ve ever written an essay, you’ll know that the scholarly register can work a terrible transformation on our ability to articulate things clearly. The desire to sound objective, knowledgeable, or just plain ‘academic’ can completely obscure what we’re trying to say. When this happens (and it does to us all) the best service anyone can do is to ask (gently) “What the heck does this mean?”

In my journal’s guidelines for authors and reviewers we put all this a bit more succinctly:

The role of the peer reviewer is twofold: Firstly, to advise the editor as to whether the paper is suitable for publication and, if so, what stage of development it has reached. [ ….] Secondly, the peer reviewer will act as a constructively critical friend to the author, providing detailed and practical feedback on all the aspects of the article.

But you’ll notice that these functions aren’t to do with the research as such, but with the presentation of the research. Scholarly communication always, necessarily, happens after the fact. It’s worth remembering that the reviewers weren’t there when the research was designed, or when the participants were selected, or when the audio recorder didn’t work properly, or the coding frame got covered in coffee stains. The reviewers aren’t responsible for the design of the research, or its outputs: all they can do is help authors make the best possible communication of the work after the research process itself is concluded.

Objective incredulity

Despite this undeniable fact, many of the “it’s a joke” articles seem to suggest that reviewers should take personal responsibility for the bad datasets, the faulty research design, or the inflated results. However, you can’t necessarily locate and expose those problems on reading alone. The only way to truly test the quality and validity of a research study is to replicate it.

Replication - the principle of reproducibility - is the whole point of the scientific method, which is basically a highly refined and very polite form of disbelief. Scholarly thinking never accepts assertions at face value, but always tests the evidence and asks uncomfortable, probing questions: is that really the case? Is it always the case? Supposing we changed the population, the dosage, one of the experimental conditions: what would the findings, and the implications we draw from them, look like then?

And here’s the nub of the whole problem: it’s not the peer reviewer’s job to replicate the research and tell us whether it’s valid or not. It’s our job - the job of the academic community as a whole, the researcher, the reader. In fact, you and me. Peer reviewers can’t certify an article as ‘true’ so that we know it meets all those criteria of authority, validity, reliability and the rest of them. All a reviewer can do is warrant that the report of a study has been composed in the appropriate register and carries the signifiers of academic authority, and that the study itself - seen only through this linguistic lens - appears to have been designed and executed in accordance with the methodological and analytical standards of the discipline. Publication in a peer-reviewed journal isn’t a simple binary qualifier that will tell you whether an article is good or bad, true or false; it’s only one of many nuanced and contextual evaluative factors we must weigh up for ourselves.

So when we talk to our students about sources and databases, we should also talk about peer review; and when we talk about peer review, we need to talk about where the authority for deciding whether something is true really rests.

Tickboxing truth

This brings us to one of the biggest challenges about learning in higher education: the need to rethink how we conceive of truth.

We generally start out by imagining that the goal of research is to discover the truth or find the answer - as though ‘Truth’ is a simple, singular entity that’s lying concealed out there, waiting for us to unearth it. And many of us experience frustration and dismay at university as a direct result of this way of thinking. We learn, slowly, that the goal of a research study is not to ‘find out the truth’, nor even to find out ‘a’ truth. It’s to test the validity of a hypothesis under certain conditions. Research will never let us say “This is what we know”, but only “This is what we believe - for now”.

Research doesn’t solve problems and say we can close the book on them. Rather it frames problems in new ways, which give rise to further questions, debate, discussion and further research. Occasionally these new ways of framing problems can painfully disrupt our entire understanding of the world. Yet once we understand that knowledge is a fluid construct created by communities, not a buried secret waiting for us to discover, then we also come to understand that there can be no last word in research: it is, rather, an ongoing conversation.

The real problem with peer review is that we’ve elevated it to a status it can’t legitimately occupy. We’ve tried to turn it into a truth guarantee, a kind of kitemark of veracity, but in doing so we’ve shut our eyes to the reality that truth in research is a shifting and slippery beast.

Ultimately, we don’t get to outsource evaluation: it’s up to each one of us to make the judgement on how far a study is valid, authoritative, and relevant. As teaching librarians, it’s our job to help our learners develop a critical mindset - that same objective incredulity that underlies scientific method, that challenges assertions and questions authority. And that being so, it’s imperative that we not only foster certain attitudes to information in our students, but model them ourselves in our own behaviour. In particular, our own approach to information should never be a blind acceptance of any rubber-stamp, any external warrant, any authority - no matter how eminent.

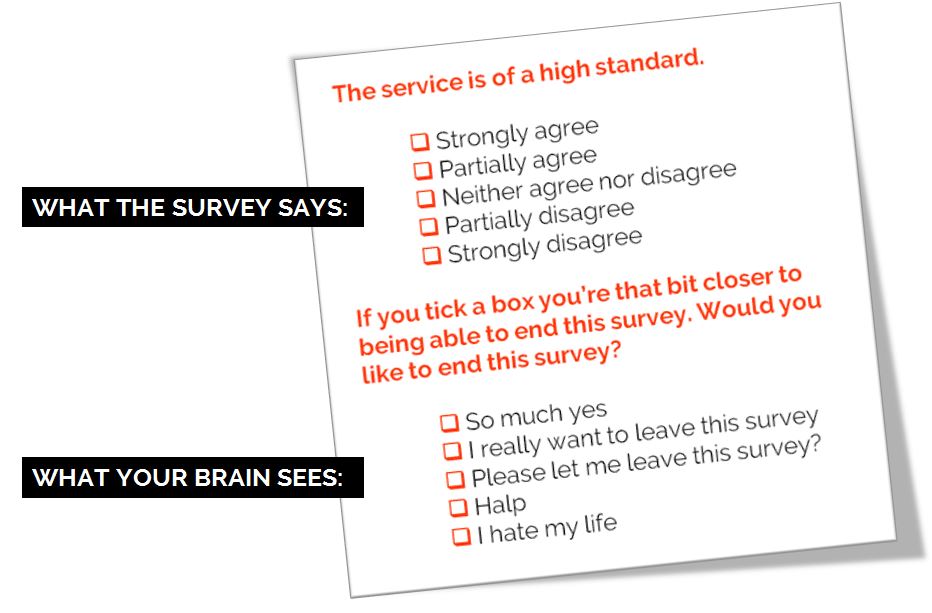

This means that the little tickbox that says ‘peer reviewed’ may be the greatest disservice we do to the thoughtful scepticism we seek to help develop in our students, and in our society at large. Encouraging people to think that the job of assessing quality happens somewhere else, by someone else, leads to a populace which is alternately complacent and outraged, and in both states unwilling to undertake the critical engagement with information that leads us to be able to speak truth to power.

The only joke is to think that peer review can stand in for that.